There’s a myth in the industry that performance penalties apply as soon as you virtualise. This may have been true some time ago but we’d argue that modern technology implementations are eroding this out-of-date view!

As part of the work we are doing with a stock exchange facility, we have been investigating the implications of moving towards a software-defined, virtualised environment. The organisation in question was interested in combining its development and production environments into a single on-premises cloud to ease management and enable fast internal development of features.

Their existing setup had two separate platforms which meant migrating applications between environments was a little more time-consuming than perhaps it should have been. The performance in their test environment was also significantly different to the production environment as a result of how the existing virtualised infrastructure was implemented.

Our goal was to explore SR-IOV and physical function passthrough on the customer’s preferred NIC vendor (which happened to be Solarflare).

The test environment consisted of two servers directly connected to one another (without a switch). We investigated the performance on multiple generations of CPUs across a range of clock frequencies to observe the impact of frequency on low-latency, small-packet traffic. We were also fortunate enough to get our hands on some overclocked HFT servers, which we populated with the Solarflare XtremeScale SFC9250. We would first measure the bare metal performance of this environment and then repeat the tests virtualised on top of OpenStack (KVM) on the same hardware.

To begin, we set up the Solarflare drivers and the base environment (note: the environment was a CentOS 7 minimal install to begin with).

# Install dependencies and SF modules

yum -y groupinstall 'Development tools'

yum -y install kernel-devel wget numactl

# install openonload

wget https://openonload.org/download/openonload-201811-u1.tgz

tar zxvf openonload-201811-u1.tgz

./openonload-201811-u1/scripts/onload_install

# get sfnettest too (Solarflare net test)

wget https://www.openonload.org/download/sfnettest/sfnettest-1.5.0.tgz

# extract the archive before building

tar zxvf sfnettest-1.5.0.tgz

cd sfnettest-1.5.0/src

make

# note: to check which firmware is running, just run: sfboot

# to factory reset: sfboot -i enp139s0f0 --factory-reset (x2 card)

# change the firmware to full feature (need this when using passthrough for VMs)

[root@solarflare01 Solarflare]# sfboot --adapter=enp139s0f0 firmware-variant=full-feature

# set the low latency firmware (use this when doing perf tests)

[root@solarflare01 ~]# sfboot --adapter=enp139s0f0 firmware-variant=ultra-low-latency

# note: for a cold power cycle just run

ipmitool power cycle

With the above in place on both servers we can start to look at the tuning. As the servers we are using are dual socket (2 physical CPUs, each with multiple local cores and PCI connectivity), we need to be careful with NUMA placement of the workloads. For latency tests we want to run the benchmarks on the cores closest to the NIC. There are a few tools we can use to help us identify the cores and devices per NUMA node.

These initial tests were also run on some older hardware, where the CPU was an Intel E5-2620 v4 running at 2.1GHz (so these are worst-case results for the SF NIC).

# what numa node is the interface connected to

cat /sys/class/net/enp139s0f0/device/numa_node

# what cores are in that numa node

cat /sys/devices/system/node/node1/cpulist
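The two lookups above can be combined into one helper. This is a minimal sketch rather than part of the original procedure: the function name `nic_numa_cpus` and its optional second argument (an alternative sysfs root, useful only for trying the function against a fake tree) are assumptions made for illustration.

```shell
# Print the NUMA node and the CPU list local to a network interface.
# $1 = interface name, $2 = sysfs root (hypothetical override, defaults to /sys)
nic_numa_cpus() {
    local iface="$1" sysfs="${2:-/sys}" node
    node=$(cat "$sysfs/class/net/$iface/device/numa_node") || return 1
    # The kernel reports -1 when it has no NUMA information for the device.
    if [ "$node" -lt 0 ]; then
        echo "no NUMA affinity reported for $iface" >&2
        return 1
    fi
    echo "node=$node cpus=$(cat "$sysfs/devices/system/node/node$node/cpulist")"
}
```

On the servers described here it would be called as `nic_numa_cpus enp139s0f0`, and one of the listed cores would then be passed to `taskset -c`.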

# run latency tests (with the ultra-low-latency firmware); in this instance core 8 was local to the SF NIC

# on one node run

[zippy@solarflare02 src]# onload --profile=latency taskset -c 8 ./sfnt-pingpong

# then on the other node

[zippy@solarflare02 src]# onload --profile=latency taskset -c 8 ./sfnt-pingpong --affinity "1;1" tcp 192.168.10.5

oo:sfnt-pingpong[21133]: Using OpenOnload 201811-u1 Copyright 2006-2019 Solarflare Communications, 2002-2005 Level 5 Networks [0]

# cmdline: ./sfnt-pingpong --affinity 1;1 tcp 192.168.10.5

# version: 1.5.0

# src: 8dc3b027d85b28bedf9fd731362e4968

# date: Fri 19 Jul 14:27:10 BST 2019

# uname: Linux solarflare02.testing 3.10.0-957.21.3.el7.x86_64 #1 SMP Tue Jun 18 16:35:19 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

# cpu: model name : Intel(R) Xeon(R) CPU E5-2620 v4 @ 2.10GHz

# lspci: 05:00.0 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

# lspci: 05:00.1 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

# lspci: 8b:00.0 Ethernet controller: Solarflare Communications XtremeScale SFC9250 10/25/40/50/100G Ethernet Controller (rev 01)

# lspci: 8b:00.1 Ethernet controller: Solarflare Communications XtremeScale SFC9250 10/25/40/50/100G Ethernet Controller (rev 01)

# enp139s0f0: driver: sfc

# enp139s0f0: version: 4.15.3.1011

# enp139s0f0: bus-info: 0000:8b:00.0

# enp139s0f1: driver: sfc

# enp139s0f1: version: 4.15.3.1011

# enp139s0f1: bus-info: 0000:8b:00.1

# enp5s0f0: driver: igb

# enp5s0f0: version: 5.4.0-k

# enp5s0f0: bus-info: 0000:05:00.0

# enp5s0f1: driver: igb

# enp5s0f1: version: 5.4.0-k

# enp5s0f1: bus-info: 0000:05:00.1

# ram: MemTotal: 65693408 kB

# tsc_hz: 2099969430

# LD_PRELOAD=libonload.so

# onload_version=201811-u1

# EF_TCP_FASTSTART_INIT=0

# EF_POLL_USEC=100000

# EF_TCP_FASTSTART_IDLE=0

# server LD_PRELOAD=libonload.so

# percentile=99

#

# size mean min median max %ile stddev iter

1 1768 1676 1719 50581 2166 158 842000

2 1729 1680 1720 21333 1839 84 862000

4 1730 1681 1720 20046 1844 92 861000

8 1731 1682 1722 20967 1850 92 861000

16 1739 1684 1730 19944 1867 89 857000

32 1752 1694 1740 10220 1877 90 851000

64 1801 1745 1791 21847 1931 97 828000

128 1893 1824 1875 14862 2029 98 788000

256 2074 1930 2069 27097 2236 111 719000

512 2300 2176 2289 11293 2469 103 649000

1024 2606 2475 2585 23790 2919 141 573000

2048 3857 3521 3829 13734 4398 191 388000

4096 4664 4401 4622 22394 5181 186 321000

8192 6547 6214 6491 15511 7508 240 229000

16384 9997 9540 9946 28038 11050 293 150000

32768 16476 15478 16419 34062 18176 432 91000

65536 29156 27571 29151 54446 31152 647 52000

These are our baseline figures. The numbers above (reported in nanoseconds) confirm that our systems are capable of around 1.7usec (microseconds) for small messages. These are the bare metal results, and the numbers to beat when we compare our virtualised setup.

Out of curiosity, we wanted to understand what sort of impact the Solarflare firmware type has on performance. Let’s switch the full feature firmware back on and take a look…
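For reading tables like the one above, a small filter can convert the rows to microseconds. This is a sketch under the assumption that every data row has the eight columns shown (size, mean, min, median, max, %ile, stddev, iter) with latencies in nanoseconds; the function name `pingpong_usec` is ours, not part of sfnettest.

```shell
# Read sfnt-pingpong output on stdin and print: size mean_usec pct99_usec
pingpong_usec() {
    awk '
        # Data rows have exactly eight all-numeric fields; the "#" header,
        # onload banner lines and blank lines are skipped.
        NF != 8 { next }
        { for (i = 1; i <= NF; i++) if ($i !~ /^[0-9]+$/) next }
        { printf "%s %.2f %.2f\n", $1, $2 / 1000, $6 / 1000 }
    '
}
```

Saving a run to a file and piping it through, for example `pingpong_usec < baremetal-ull.txt` (a hypothetical file name), gives one line per message size.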

# redo with the standard full feature firmware

$ sfboot firmware-variant=full-feature

$ ipmitool power cycle

[root@solarflare01 src]# sfboot | grep -i variant

Firmware variant Full feature / virtualization

Firmware variant Full feature / virtualization

# size mean min median max %ile stddev iter

1 2248 2022 2071 4200847 3239 6349 663000

2 2209 2022 2075 889552 3158 3298 675000

4 2207 2018 2078 865345 3132 3096 676000

8 2199 2035 2093 4827169 2692 6848 678000

16 2241 2045 2092 219934 3027 3539 666000

32 2230 2038 2102 4414673 3319 6302 669000

64 2244 2064 2128 4589940 3179 6427 665000

128 2269 2105 2170 185278 3305 1587 658000

256 2522 2220 2366 4359659 4037 6899 592000

512 2588 2329 2460 1322388 3893 3480 577000

1024 2822 2529 2614 4219115 8031 6869 530000

2048 3973 3566 3742 4108063 8391 7989 377000

4096 5064 4480 4745 270765 11188 4253 296000

8192 7103 6283 6700 4363507 13410 11234 211000

16384 11054 9628 10243 1432292 18087 10285 136000

32768 18228 15645 16971 4225292 34181 16490 83000

65536 30909 27770 29450 4078629 66407 22086 49000

You’ll see that’s quite a jump: mean latency for small messages rises from around 1.7usec to around 2.2usec, and the maximum and standard deviation columns are far worse, with outliers of several milliseconds. Certainly worth keeping in mind for latency-sensitive applications.

With the baseline numbers recorded, we now move on to the testing in our OpenStack environment. For this we are leveraging SR-IOV, PCI passthrough and the support for physical functions in the SF NIC. Let’s run through the steps to set this up.
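To put a number on the difference between two runs, the mean columns can be joined by message size. The following is an illustrative sketch only: `compare_means` is a hypothetical helper, and it assumes each run has been saved to its own file in the raw sfnt-pingpong format shown above.

```shell
# Usage: compare_means BASELINE_FILE OTHER_FILE
# Prints: size baseline_mean other_mean percent_change
compare_means() {
    awk '
        # Keep only numeric eight-column data rows.
        NF != 8 || $1 !~ /^[0-9]+$/ || $2 !~ /^[0-9]+$/ { next }
        # First file: remember the mean for each message size.
        NR == FNR { base[$1] = $2; next }
        # Second file: report sizes that also appear in the baseline.
        ($1 in base) {
            printf "%s %d %d %+.1f%%\n", $1, base[$1], $2,
                   ($2 - base[$1]) * 100 / base[$1]
        }
    ' "$1" "$2"
}
```

Fed the 1-byte rows from the two tables above (1768ns and 2248ns), it reports a change of +27.1%.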

# enable 4 physical functions per single SF NIC

sfboot switch-mode=pfiov pf-count=4

# we need to update the kernel command line to enable the Intel IOMMU

grubby --update-kernel=ALL --args="intel_iommu=on"

grubby --info=ALL

# reboot
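After the reboot it is worth confirming that the new argument actually reached the running kernel before going any further. A minimal sketch, assuming a helper of our own naming (`iommu_on_cmdline`) whose optional argument exists only so it can be pointed at a test file instead of `/proc/cmdline`:

```shell
# Succeeds (exit 0) if intel_iommu=on is on the kernel command line.
# $1 = file to inspect (hypothetical override, defaults to /proc/cmdline)
iommu_on_cmdline() {
    local cmdline_file="${1:-/proc/cmdline}"
    # Split the command line into one argument per line, then match exactly.
    tr -s ' ' '\n' < "$cmdline_file" | grep -qx 'intel_iommu=on'
}
```

A typical use would be `iommu_on_cmdline && echo "IOMMU argument present"`.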

Now let’s check the PCI entries on the server and collect the PCI IDs, as we’ll need to pass these to the nova configuration in OpenStack for PCI passthrough.

# let's get the IDs of the devices to pass through

[root@solarflare02 ~]# lspci -nn | grep -i Solarflare | head -n 1

8b:00.0 Ethernet controller [0200]: Solarflare Communications XtremeScale SFC9250 10/25/40/50/100G Ethernet Controller [1924:0b03] (rev 01)

There are a few parameters we need to add to the OpenStack configuration to instruct it to use these devices rather than the standard virtual NIC.

# nova.conf

[filter_scheduler]

enabled_filters = RetryFilter, AvailabilityZoneFilter, RamFilter, ComputeFilter, ComputeCapabilitiesFilter, ImagePropertiesFilter, ServerGroupAntiAffinityFilter, ServerGroupAffinityFilter, PciPassthroughFilter

available_filters=nova.scheduler.filters.all_filters

# these IDs are taken from the lspci command above

[pci]

passthrough_whitelist={"vendor_id":"1924","product_id":"0b03"}

alias={"name":"SolarFlareNIC","vendor_id":"1924","product_id":"0b03"}

# reconfigure / restart services as required
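The two `[pci]` entries can be generated from the `lspci -nn` line rather than typed by hand, which avoids transposing the IDs. This is a sketch, not the method we used at the time: `nova_pci_lines` is a hypothetical helper, and it assumes the `[vendor:product]` pair appears in square brackets as in the output above.

```shell
# Usage: lspci -nn | grep -i Solarflare | head -n 1 | nova_pci_lines ALIAS_NAME
# Emits the passthrough_whitelist and alias lines for the first ID pair found.
nova_pci_lines() {
    local alias_name="$1" ids vendor product
    # Match "[hhhh:hhhh]"; the class code such as "[0200]" has no colon.
    ids=$(sed -n 's/.*\[\([0-9a-f]\{4\}\):\([0-9a-f]\{4\}\)\].*/\1 \2/p' | head -n 1)
    [ -n "$ids" ] || { echo "no [vendor:product] ID found" >&2; return 1; }
    vendor=${ids% *}
    product=${ids#* }
    printf 'passthrough_whitelist={"vendor_id":"%s","product_id":"%s"}\n' "$vendor" "$product"
    printf 'alias={"name":"%s","vendor_id":"%s","product_id":"%s"}\n' "$alias_name" "$vendor" "$product"
}
```

The output is intended to be pasted under the `[pci]` section of nova.conf; the alias name must match the one used in the flavor property.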

# flavor setup

openstack flavor create --public --ram 4096 --disk 10 --vcpus 4 m1.large.sfn

openstack flavor set m1.large.sfn --property pci_passthrough:alias='SolarFlareNIC:1'

# spin up VM

openstack server create --image centos7 --flavor m1.large.sfn --key-name mykey --network demo-net sfn1

# repeat for second virtual server placing it on the other physical system using availability zones

OK, we are now ready to repeat the tests from earlier. We re-install the drivers and benchmark tools inside the VMs as documented above and run sfnt-pingpong again.

# results within a VM

[zippy@sfn1 src]# onload --profile=latency ./sfnt-pingpong --affinity "1;1" tcp 192.168.10.1

onload: Note: Disabling CTPIO cut-through because only adapters running at 10GbE benefit from it

oo:sfnt-pingpong[20195]: Using OpenOnload 201811-u1 Copyright 2006-2019 Solarflare Communications, 2002-2005 Level 5 Networks [0]

# cmdline: ./sfnt-pingpong --affinity 1;1 tcp 192.168.10.1

# version: 1.5.0

# src: 8dc3b027d85b28bedf9fd731362e4968

# date: Tue Jul 30 23:26:52 UTC 2019

# uname: Linux sfn8.novalocal 3.10.0-957.21.3.el7.x86_64 #1 SMP Tue Jun 18 16:35:19 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

# cpu: model name : Intel Core Processor (Broadwell)

sh: /sbin/lspci: No such file or directory

# eth0: driver: virtio_net

# eth0: version: 1.0.0

# eth0: bus-info: 0000:00:03.0

# eth1: driver: sfc

# eth1: version: 4.15.3.1011

# eth1: bus-info: 0000:00:05.0

# ram: MemTotal: 3880088 kB

# tsc_hz: 2099966200

# LD_PRELOAD=libonload.so

# onload_version=201811-u1

# EF_TCP_FASTSTART_INIT=0

# EF_CTPIO_SWITCH_BYPASS=1

# EF_POLL_USEC=100000

# EF_TCP_FASTSTART_IDLE=0

# EF_EPOLL_MT_SAFE=1

# server LD_PRELOAD=libonload.so

# percentile=99

#

# size mean min median max %ile stddev iter

1 1942 1725 1833 273704 4923 802 766000

2 1900 1727 1816 657833 4651 1035 783000

4 1891 1735 1817 402257 4585 849 787000

8 1860 1666 1803 223816 4601 775 800000

16 1785 1638 1718 773823 4202 1102 834000

32 1836 1661 1745 157788 4279 649 811000

64 1903 1717 1789 425864 4582 897 782000

128 2052 1860 1954 537661 5154 978 726000

256 2247 1994 2147 75286 5532 771 663000

512 2541 2240 2405 307319 6814 939 587000

1024 2642 2335 2545 24229 5824 701 565000

2048 3539 3210 3433 2107848 6482 3312 422000

4096 4558 4139 4400 443807 8669 1140 328000

8192 7038 6009 6652 2194215 13286 4967 213000

16384 10645 9503 10182 291544 18314 1944 141000

32768 15752 14786 15096 502919 22343 2251 96000

65536 29551 26566 28286 352787 40923 3319 51000

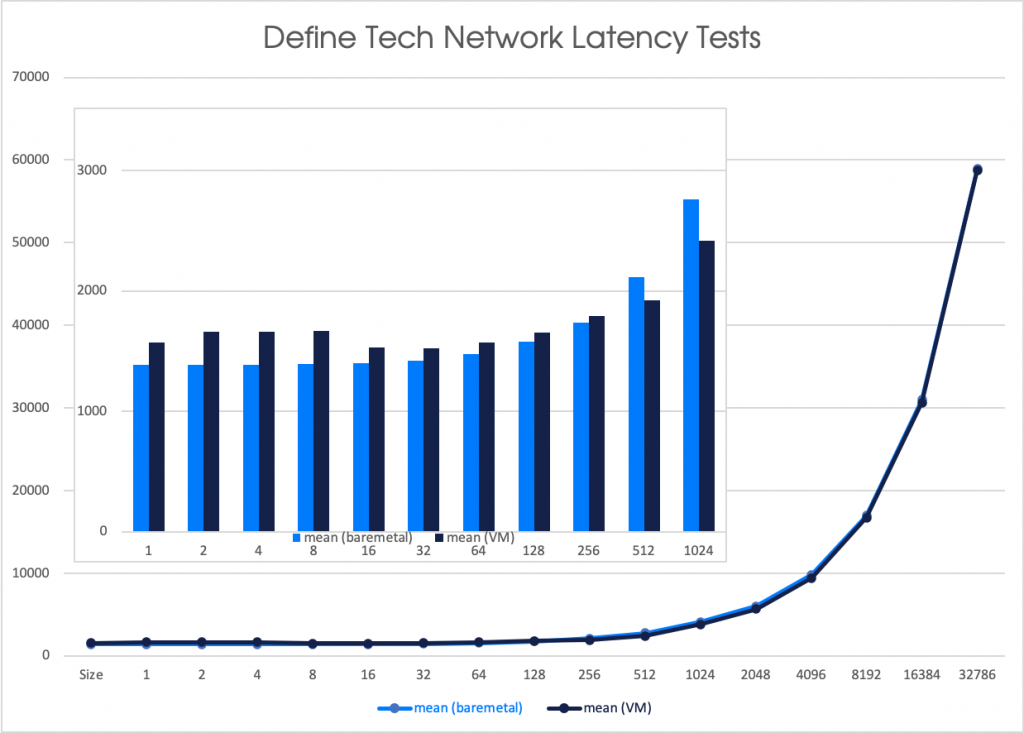

And bingo! Reasonable latency figures from a virtualised environment. At the 16-byte message size the mean is virtually identical to bare metal (1785ns against 1739ns). On average there is a small penalty for virtualisation, and the 99th percentile and maximum figures are noticeably higher, but that’s a penalty many would accept for the functional benefits that virtualisation brings (snapshots, backup, migration, rapid deployment, etc.).

Now that we had the process down, we wanted to see how this scaled and performed on some higher-end hardware, i.e. the Supermicro-based HFT boxes running Cascade Lake CPUs (6246 @ 3.3GHz).

# updated on the LL2 cascade lake box

[root@solarflare02-ll2 src]# onload --profile=latency taskset -c 12 ./sfnt-pingpong --affinity "1;1" tcp 192.168.10.5

oo:sfnt-pingpong[27963]: Using OpenOnload 201811-u1 Copyright 2006-2019 Solarflare Communications, 2002-2005 Level 5 Networks [0]

# cmdline: ./sfnt-pingpong --affinity 1;1 tcp 192.168.10.5

# version: 1.5.0

# src: 8dc3b027d85b28bedf9fd731362e4968

# date: Thu 1 Aug 16:00:30 BST 2019

# uname: Linux solarflare02-ll2.internal 3.10.0-957.el7.x86_64 #1 SMP Thu Nov 8 23:39:32 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

# cpu: model name : Intel(R) Xeon(R) Gold 6246 CPU @ 3.30GHz

# lspci: 3b:00.0 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

# lspci: 3b:00.1 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

# lspci: 3b:00.2 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

# lspci: 3b:00.3 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

# lspci: 5e:00.0 Ethernet controller: Solarflare Communications SFC9220 10/40G Ethernet Controller (rev 02)

# lspci: 5e:00.1 Ethernet controller: Solarflare Communications SFC9220 10/40G Ethernet Controller (rev 02)

# lspci: 86:00.0 Ethernet controller: Solarflare Communications XtremeScale SFC9250 10/25/40/50/100G Ethernet Controller (rev 01)

# lspci: 86:00.1 Ethernet controller: Solarflare Communications XtremeScale SFC9250 10/25/40/50/100G Ethernet Controller (rev 01)

# enp134s0f0: driver: sfc

# enp134s0f0: version: 4.15.3.1011

# enp134s0f0: bus-info: 0000:86:00.0

# enp134s0f1: driver: sfc

# enp134s0f1: version: 4.15.3.1011

# enp134s0f1: bus-info: 0000:86:00.1

# enp59s0f0: driver: igb

# enp59s0f0: version: 5.4.0-k

# enp59s0f0: bus-info: 0000:3b:00.0

# enp59s0f1: driver: igb

# enp59s0f1: version: 5.4.0-k

# enp59s0f1: bus-info: 0000:3b:00.1

# enp59s0f2: driver: igb

# enp59s0f2: version: 5.4.0-k

# enp59s0f2: bus-info: 0000:3b:00.2

# enp59s0f3: driver: igb

# enp59s0f3: version: 5.4.0-k

# enp59s0f3: bus-info: 0000:3b:00.3

# enp94s0f0: driver: sfc

# enp94s0f0: version: 4.15.3.1011

# enp94s0f0: bus-info: 0000:5e:00.0

# enp94s0f1: driver: sfc

# enp94s0f1: version: 4.15.3.1011

# enp94s0f1: bus-info: 0000:5e:00.1

# ram: MemTotal: 196463288 kB

# tsc_hz: 3366144400

# LD_PRELOAD=libonload.so

# onload_version=201811-u1

# EF_TCP_FASTSTART_INIT=0

# EF_POLL_USEC=100000

# EF_TCP_FASTSTART_IDLE=0

# server LD_PRELOAD=libonload.so

# percentile=99

#

# size mean min median max %ile stddev iter

1 1426 1398 1421 39777 1582 67 1000000

2 1428 1396 1422 18082 1584 45 1000000

4 1428 1398 1422 18566 1582 47 1000000

8 1433 1405 1427 5724 1592 43 1000000

16 1439 1412 1434 17545 1581 42 1000000

32 1455 1428 1450 6005 1601 35 1000000

64 1497 1466 1492 17158 1603 41 996000

128 1574 1541 1570 18502 1655 38 947000

256 1716 1668 1714 8920 1806 35 869000

512 1849 1800 1846 15524 1910 38 807000

1024 2441 2355 2433 22335 2626 76 612000

2048 3829 3688 3818 26569 4055 79 391000

4096 5664 5554 5650 14221 5865 60 265000

8192 9451 9321 9439 17686 9679 73 159000

16384 16701 16540 16684 19869 16972 84 90000

32768 30656 30489 30644 44469 30973 111 49000

65536 58556 58389 58540 60230 58895 93 26000

# ll2 with XtremeScale SFC9250 (however on a 10Gb cable, not 25Gb)

[root@solarflare02-ll2 src]# onload --profile=latency taskset -c 12 ./sfnt-pingpong --affinity "1;1" tcp 192.168.25.5

oo:sfnt-pingpong[28262]: Using OpenOnload 201811-u1 Copyright 2006-2019 Solarflare Communications, 2002-2005 Level 5 Networks [4]

# cmdline: ./sfnt-pingpong --affinity 1;1 tcp 192.168.25.5

# version: 1.5.0

# src: 8dc3b027d85b28bedf9fd731362e4968

# date: Thu 1 Aug 16:23:39 BST 2019

# uname: Linux solarflare02-ll2.internal 3.10.0-957.el7.x86_64 #1 SMP Thu Nov 8 23:39:32 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

# cpu: model name : Intel(R) Xeon(R) Gold 6246 CPU @ 3.30GHz

# lspci: 3b:00.0 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

# lspci: 3b:00.1 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

# lspci: 3b:00.2 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

# lspci: 3b:00.3 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

# lspci: 5e:00.0 Ethernet controller: Solarflare Communications SFC9220 10/40G Ethernet Controller (rev 02)

# lspci: 5e:00.1 Ethernet controller: Solarflare Communications SFC9220 10/40G Ethernet Controller (rev 02)

# lspci: 86:00.0 Ethernet controller: Solarflare Communications XtremeScale SFC9250 10/25/40/50/100G Ethernet Controller (rev 01)

# lspci: 86:00.1 Ethernet controller: Solarflare Communications XtremeScale SFC9250 10/25/40/50/100G Ethernet Controller (rev 01)

# enp134s0f0: driver: sfc

# enp134s0f0: version: 4.15.3.1011

# enp134s0f0: bus-info: 0000:86:00.0

# enp134s0f1: driver: sfc

# enp134s0f1: version: 4.15.3.1011

# enp134s0f1: bus-info: 0000:86:00.1

# enp59s0f0: driver: igb

# enp59s0f0: version: 5.4.0-k

# enp59s0f0: bus-info: 0000:3b:00.0

# enp59s0f1: driver: igb

# enp59s0f1: version: 5.4.0-k

# enp59s0f1: bus-info: 0000:3b:00.1

# enp59s0f2: driver: igb

# enp59s0f2: version: 5.4.0-k

# enp59s0f2: bus-info: 0000:3b:00.2

# enp59s0f3: driver: igb

# enp59s0f3: version: 5.4.0-k

# enp59s0f3: bus-info: 0000:3b:00.3

# enp94s0f0: driver: sfc

# enp94s0f0: version: 4.15.3.1011

# enp94s0f0: bus-info: 0000:5e:00.0

# enp94s0f1: driver: sfc

# enp94s0f1: version: 4.15.3.1011

# enp94s0f1: bus-info: 0000:5e:00.1

# ram: MemTotal: 196463288 kB

# tsc_hz: 3366141000

# LD_PRELOAD=libonload.so

# onload_version=201811-u1

# EF_TCP_FASTSTART_INIT=0

# EF_POLL_USEC=100000

# EF_TCP_FASTSTART_IDLE=0

# server LD_PRELOAD=libonload.so

# percentile=99

#

# size mean min median max %ile stddev iter

1 1384 1314 1376 30745 1551 68 1000000

2 1386 1319 1377 16738 1550 54 1000000

4 1389 1325 1381 14021 1554 51 1000000

8 1395 1334 1388 5473 1560 50 1000000

16 1403 1340 1395 25656 1571 61 1000000

32 1421 1361 1412 8571 1594 53 1000000

64 1473 1412 1465 8125 1648 48 1000000

128 1581 1516 1572 17270 1771 55 943000

256 1736 1626 1728 15769 1943 63 860000

512 2111 1974 2103 5844 2332 65 707000

1024 2762 2636 2748 16447 3018 84 541000

2048 4118 3941 4098 17379 4424 95 364000

4096 6006 5837 5990 19417 6298 92 250000

8192 9835 9589 9817 20922 10207 125 153000

16384 16972 16732 16952 31324 17381 136 89000

32768 31007 30723 30990 41647 31396 139 49000

65536 58915 58605 58891 71188 59351 160 26000

Mean latency drops to around 1.4usec (with a minimum of 1.3usec), and even more impressive is the standard deviation (stddev column), which shows very little jitter or variance in the results: consistent low-latency performance. How will the VMs stack up against this uplift in performance?

# Results of two HFT nodes VM (same flavor as above)

[root@sfn10 src]# onload --profile=latency taskset -c 0 ./sfnt-pingpong --affinity "1;1" tcp 192.168.10.2

oo:sfnt-pingpong[20567]: Using OpenOnload 201811-u1 Copyright 2006-2019 Solarflare Communications, 2002-2005 Level 5 Networks [5]

# cmdline: ./sfnt-pingpong --affinity 1;1 tcp 192.168.10.2

# version: 1.5.0

# src: 8dc3b027d85b28bedf9fd731362e4968

# date: Tue 20 Aug 23:00:19 UTC 2019

# uname: Linux sfn10 3.10.0-957.27.2.el7.x86_64 #1 SMP Mon Jul 29 17:46:05 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

# cpu: model name : Intel Xeon Processor (Skylake, IBRS)

# lspci: 00:03.0 Ethernet controller: Red Hat, Inc. Virtio network device

# lspci: 00:05.0 Ethernet controller: Solarflare Communications XtremeScale SFC9250 10/25/40/50/100G Ethernet Controller (rev 01)

# ens5: driver: sfc

# ens5: version: 4.15.3.1011

# ens5: bus-info: 0000:00:05.0

# eth1: driver: virtio_net

# eth1: version: 1.0.0

# eth1: bus-info: 0000:00:03.0

# ram: MemTotal: 3880072 kB

# tsc_hz: 3366133080

# LD_PRELOAD=libonload.so

# onload_version=201811-u1

# EF_TCP_FASTSTART_INIT=0

# EF_POLL_USEC=100000

# EF_TCP_FASTSTART_IDLE=0

# server LD_PRELOAD=libonload.so

# percentile=99

#

# size mean min median max %ile stddev iter

1 1572 1444 1503 291943 2593 388 947000

2 1663 1577 1630 292976 2911 415 896000

4 1661 1578 1631 194620 2859 321 897000

8 1668 1577 1637 271314 2890 366 893000

16 1533 1453 1486 64385 2538 207 971000

32 1526 1468 1499 240736 2461 380 976000

64 1569 1508 1543 238612 2468 335 949000

128 1651 1586 1623 52374 2899 229 903000

256 1794 1717 1771 293475 2779 434 831000

512 1923 1827 1903 316119 2793 456 776000

1024 2417 2346 2394 52744 3193 197 618000

2048 3798 3650 3766 291841 4781 617 394000

4096 5677 5519 5643 70912 6694 335 264000

8192 9431 9249 9384 826605 10561 2068 159000

16384 16706 16502 16665 269437 17901 939 90000

32768 30649 30414 30603 181373 32231 793 49000

65536 58755 58383 58683 296877 60411 1645 26000

The latency numbers are again slightly higher, but around 1.6usec from a VM across the network is not a bad result at all. We were set a target of sub-2usec and comfortably passed that with high-end hardware, and even managed to creep in under it with previous-generation hardware as well.

For those environments that need bare metal performance (i.e. trading/production), we can still treat servers as consumable resources (our OpenStack environment supports bare metal provisioning) and, as demonstrated above, if you need to virtualise (test/dev) you can still achieve very high levels of performance.
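A check against a latency target like this is easy to automate across runs. The sketch below is illustrative only: `sizes_under_target` is a hypothetical helper, and it assumes the raw sfnt-pingpong table format with the mean latency in nanoseconds in the second column.

```shell
# Usage: sizes_under_target TARGET_NS < results.txt
# Prints each message size whose mean latency is below TARGET_NS.
sizes_under_target() {
    awk -v target="$1" '
        # Keep only numeric eight-column data rows.
        NF != 8 || $1 !~ /^[0-9]+$/ || $2 !~ /^[0-9]+$/ { next }
        # Force numeric comparison of the mean against the target.
        $2 + 0 < target + 0 { print $1 }
    '
}
```

With a 2000ns target and the VM results above, the 512-byte row (mean 1923ns) passes while the 1024-byte row (mean 2417ns) does not.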

If you need performance and flexibility in your private cloud environment, talk to Define Tech!
